Yang Z, Dhingra B, He K, et al. Glomo: Unsupervisedly learned relational graphs as transferable representations[J]. arXiv preprint arXiv:1806.05662, 2018.

1. Overview

1.1. Motivation

- most existing transfer-learning methods focus on learning generic feature vectors and ignore more structured graphical representations

This paper proposes GLoMo (Graphs from LOw-level unit MOdeling):

- learns generic latent relational graphs, without supervision, that capture dependencies between pairs of data units (e.g., words or pixels)

- experiments on question answering, natural language inference, sentiment analysis, and image classification

2. Methods

Training

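- graph predictor g. produces a set of graphs G = g(x) from the input x

- graphs G. an L×T×T tensor, i.e. one T×T affinity matrix per layer, where T is the number of units in x

- L. number of layers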

Testing

- G = g(x’), where x’ is the input of the downstream task

- G is fed to the downstream task network to augment it: G is multiplied with the task-specific features

2.1. Graph Predictor

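- key CNN. computes a key vector for each unit at each layer

- query CNN. computes a query vector for each unit at each layer; the affinity G_l is built from key-query dot products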
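A minimal sketch of how one layer's affinity matrix can be formed from keys and queries, assuming the two CNNs have already produced one key and one query vector per unit. Following our reading of the paper, scores are squared-ReLU key-query dot products plus a bias b, normalized over i for each column j: G[i][j] = relu(k_i·q_j + b)² / Σ_i' relu(k_i'·q_j + b)². The function name, the default bias, and the all-zero-column fallback are illustrative choices, not taken from the paper.

```python
from typing import List

Matrix = List[List[float]]


def squared_relu_affinity(keys: Matrix, queries: Matrix, bias: float = 0.0) -> Matrix:
    """Build one layer's T x T affinity matrix from per-unit keys and queries (hypothetical helper)."""
    t = len(keys)
    # Unnormalized scores: squared ReLU of each key-query dot product plus a bias.
    scores = [
        [max(0.0, sum(k * q for k, q in zip(keys[i], queries[j])) + bias) ** 2 for j in range(t)]
        for i in range(t)
    ]
    graph = [[0.0] * t for _ in range(t)]
    for j in range(t):
        col_sum = sum(scores[i][j] for i in range(t))
        if col_sum == 0.0:
            continue  # every score in this column was clipped to zero: leave it empty
        for i in range(t):
            graph[i][j] = scores[i][j] / col_sum  # each non-empty column sums to 1
    return graph


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
queries = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
print(squared_relu_affinity(keys, queries))
# -> [[0.5, 0.0, 0.0], [0.0, 0.5, 0.0], [0.5, 0.5, 0.0]]
```

The third query has a negative dot product with every key, so its whole column is clipped to zero, which is the kind of sparsity a softmax cannot produce.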

2.2. Input of Feature Predictor

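feature predictor f. computes the layer-l feature of each unit from the previous layer's features and the graph G_l: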

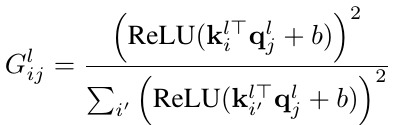

- G_l. affinity matrix of layer l, produced by the graph predictor

- v. compositional function, such as a GRU cell
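A minimal sketch of one feature-predictor layer, assuming the update f_t^l = v(Σ_j G_l[j][t] f_j^{l-1}, f_t^{l-1}): each unit aggregates the previous-layer features of all units, weighted by column t of G_l, and combines the result with its own previous feature. The paper suggests a GRU for v; here `average_v` is a hypothetical toy stand-in so the example stays short and dependency-free.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def feature_layer(graph: Matrix, prev: List[Vector],
                  v: Callable[[Vector, Vector], Vector]) -> List[Vector]:
    """One layer: f_t = v(sum_j graph[j][t] * prev[j], prev[t]) for every unit t."""
    t_len, dim = len(prev), len(prev[0])
    out = []
    for t in range(t_len):
        # Graph-weighted sum of previous-layer features, using column t of the graph.
        message = [sum(graph[j][t] * prev[j][d] for j in range(t_len)) for d in range(dim)]
        out.append(v(message, prev[t]))
    return out


def average_v(message: Vector, own: Vector) -> Vector:
    """Toy compositional function: elementwise mean (a GRU cell in the paper's setting)."""
    return [(m + o) / 2 for m, o in zip(message, own)]


graph = [[0.5, 0.0], [0.5, 1.0]]
prev = [[2.0, 0.0], [0.0, 4.0]]
print(feature_layer(graph, prev, average_v))  # -> [[1.5, 1.0], [0.0, 4.0]]
```

Passing v as a function keeps the propagation rule separate from the choice of compositional function, so a learned GRU cell could be dropped in without changing `feature_layer`.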

2.3. Objective Function

- context prediction: the final-layer feature of each unit t is used to predict the next D units x_{t+1}, ..., x_{t+D}
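A minimal sketch of a context-prediction loss, assuming each final-layer feature predicts the following D tokens through one linear projection per offset followed by a softmax over the vocabulary, and that the loss is the average negative log-likelihood. The per-offset projections and the averaging are assumptions made for illustration; `context_prediction_loss` is a hypothetical name.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def log_softmax(logits: Vector) -> Vector:
    top = max(logits)  # subtract the max for numerical stability
    log_z = top + math.log(sum(math.exp(z - top) for z in logits))
    return [z - log_z for z in logits]


def context_prediction_loss(features: List[Vector], tokens: Sequence[int],
                            projections: List[Matrix]) -> float:
    """Average NLL of tokens t+1..t+D given the final-layer feature of unit t.

    projections[d - 1] is a hypothetical (vocab x dim) matrix predicting token t + d.
    """
    total, count = 0.0, 0
    for t, feat in enumerate(features):
        for d, w in enumerate(projections, start=1):
            if t + d >= len(tokens):
                break  # no context left to predict near the end of the sequence
            logits = [sum(wi * fi for wi, fi in zip(row, feat)) for row in w]
            total -= log_softmax(logits)[tokens[t + d]]
            count += 1
    return total / max(count, 1)


zero_w = [[0.0, 0.0] for _ in range(4)]  # vocab of 4, feature size 2
loss = context_prediction_loss([[0.0, 0.0]] * 4, [0, 1, 2, 3], [zero_w, zero_w])
print(loss)  # uniform predictions: equals log(4)
```

With all-zero projections every prediction is uniform, so the loss equals the log of the vocabulary size; any projection that favors the true context tokens drives it lower.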

2.4. Desiderata

- decouple graphs from features: separate networks g (graphs) and f (features)

- employ a squared ReLU (rather than a softmax) to enforce sparse connections in the graphs

- hierarchical graph representation: a separate graph G_l for each of the L layers
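To illustrate the sparsity point above, a minimal sketch that normalizes the same column of scores in two ways. A softmax gives every entry a positive weight, while squared-ReLU normalization sets every non-positive score to exactly zero. Both helper names are hypothetical.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def squared_relu_normalize(scores: List[float]) -> List[float]:
    sq = [max(0.0, s) ** 2 for s in scores]  # non-positive scores become exact zeros
    total = sum(sq)
    return [x / total for x in sq] if total > 0 else sq


scores = [2.0, -1.0, 0.0, 1.0]
print(softmax(scores))                 # all four weights are non-zero
print(squared_relu_normalize(scores))  # -> [0.8, 0.0, 0.0, 0.2]
```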

2.5. Latent Graph Transfer

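- for each layer l, take the product of all affinity matrices from the first layer to the l-th layer: Λ_l = G_1 G_2 ... G_l

- take a mixture of all these graphs, M = Σ_l m_l Λ_l, where m_l are task-specific learnable weights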
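A minimal sketch of building the transferred graph: form the cumulative products Λ_l = G_1 G_2 ... G_l and mix them with weights m_l. Softmax-normalizing the mixture logits is an assumption made here so the weights are positive and sum to 1; in a real model those logits would be trained on the downstream task.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def mixture_graph(graphs: List[Matrix], logits: List[float]) -> Matrix:
    """M = sum_l m_l * Lambda_l, with Lambda_l = G_1 ... G_l and m = softmax(logits)."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    weights = [e / total for e in exps]  # assumed softmax normalization of m_l
    t = len(graphs[0])
    mixed = [[0.0] * t for _ in range(t)]
    product: Matrix = []
    for l, (g, m) in enumerate(zip(graphs, weights)):
        product = g if l == 0 else matmul(product, g)  # Lambda_l = Lambda_{l-1} G_l
        for i in range(t):
            for j in range(t):
                mixed[i][j] += m * product[i][j]
    return mixed


swap = [[0.0, 1.0], [1.0, 0.0]]
print(mixture_graph([swap, swap], [0.0, 0.0]))  # -> [[0.5, 0.5], [0.5, 0.5]]
```

Here Λ_1 swaps the two units and Λ_2 = swap·swap is the identity, so equal weights give a uniform mixture: multiplying the layers exposes multi-hop dependencies that no single G_l contains.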

2.6. Implementation

- H. task-specific features

- M. mixture graph

- [·, ·]. concatenation

- also adopts multi-head attention, i.e. several graphs per layer
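A minimal sketch of augmenting task features with the mixture graph, where each unit's output is its own feature h_t concatenated with its graph-weighted features. Two points are assumptions made for illustration: the aggregation runs in the same direction as the feature predictor (Σ_j M[j][t] h_j), and with multiple heads one mixture graph per head is applied and the results concatenated. `augment_features` is a hypothetical name.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def augment_features(h: List[Vector], mixtures: List[Matrix]) -> List[Vector]:
    """Output for unit t: [h_t, sum_j M1[j][t] h_j, sum_j M2[j][t] h_j, ...] (one block per head)."""
    t_len, dim = len(h), len(h[0])
    out = []
    for t in range(t_len):
        row = list(h[t])  # keep the original task-specific feature
        for m in mixtures:
            # Append the graph-weighted feature of unit t for this head.
            row.extend(sum(m[j][t] * h[j][d] for j in range(t_len)) for d in range(dim))
        out.append(row)
    return out


h = [[1.0, 2.0], [3.0, 4.0]]
mix = [[0.5, 0.0], [0.5, 1.0]]
print(augment_features(h, [mix]))  # -> [[1.0, 2.0, 2.0, 3.0], [3.0, 4.0, 3.0, 4.0]]
```

Keeping H alongside the graph-weighted features means the downstream model can still fall back on the original features if the transferred graph is unhelpful for the task.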

3. Experiments